Raylene Yung to depart role as Technology Modernization Fund’s top official

Technology Modernization Fund Executive Director Raylene Yung is stepping down after more than two years leading the program, the General Services Administration said Friday.

“Raylene has been instrumental in building the Technology Modernization Fund into the in-demand resource it is for federal agencies looking to modernize their IT systems,” GSA Administrator Robin Carnahan said in a statement. “We know that the Fund will continue to be a smart way we can make investments that deliver greater security, efficiency and accessibility to the American people.”

During her time leading the program, Yung developed “the foundational frameworks and processes that have enabled the Fund to mature and scale,” GSA said in a release. The program has also grown under her leadership.

The TMF, which is housed within GSA, is focused on improving technology across the government and manages more than $1.2 billion in funding. It’s currently invested roughly $770 million in 47 projects across 27 federal agencies.

“The team has significantly improved how it operates and supports agencies in their modernization journeys, and has become a more effective organization under her leadership,” the GSA said.

Until a permanent replacement is identified, the GSA said Jessie Posilkin will serve as acting executive director. Posilkin has been director of the TMF’s customer experience portfolio since August 2022.

In a statement, Yung said it’s “difficult to leave” but she was pleased that Posilkin would take over when she departs.

“Her deep knowledge of the TMF, strong partnership with the TMF Board, and dedication to helping agencies make systems and services work better, faster and more seamlessly across the federal sector will ensure a smooth transition,” Yung said of Posilkin.

Policy experts see missing pieces in agencies’ AI use case disclosures

As the government seeks to regulate artificial intelligence, its work to track applications of the technology across federal agencies would benefit from improvements in formatting, transparency and the information that disclosures include, experts and researchers told FedScoop.

Understanding AI systems — which can be integrated into everything from health care software to public housing security systems — and how they’re used is critically important. Yet federal agencies’ AI use case inventories, which were required by a 2020 executive order and remain one of the primary government programs to facilitate information sharing about this technology, are imperfect, those policy experts tell FedScoop.

“Inventories are a step, right?” said Frank Torres, a civil rights technology fellow at the Leadership Conference on Civil and Human Rights. “We want to make sure that when inventories are done, that there’s some transparency there, that people can understand how the technology is being used and see the pathway for how it was decided that that particular technology was best for that particular purpose.”

The inventories have been the subject of growing attention, especially in the wake of a 2022 Stanford report highlighting poor and patchwork compliance. FedScoop has also reported on myriad issues with the disclosures. Still, they could be an important part of the White House’s planned executive order and the Office of Management and Budget’s coming guidance on the technology. Notably, one of the initial intentions for inventories was to help inform policymaking, a former White House official who helped craft the executive order recently told FedScoop.

Michael Kratsios, managing director at Scale AI and the White House’s chief technology officer under former President Donald Trump, pointed to the importance of previous AI legislation and executive orders during a Wednesday hearing before the Oversight & Investigations and the Research & Technology subcommittees of the House Committee on Science, Space and Technology.

“I think the biggest challenge is that a lot of the requirements from the executive orders and from the legislation are important foundational pieces that future regulatory structures are built on top of,” Kratsios said in response to a question from Rep. Frank Lucas, R-Okla., about the unfulfilled aspects of those existing policies.

Without a clear understanding of potential use cases in agencies or assessments of where AI will impact the regulatory regime, it’s harder to proceed with crafting the regulation itself, Kratsios said.

The Biden administration recently released a consolidated list of the more than 700 AI use cases disclosed by federal agencies in their individual inventories that, for the first time, makes the information available in a single spreadsheet. Prior to that, agencies’ public inventories varied in terms of format, making them difficult to compare and analyze. But while the new consolidated inventory makes the information available in one place, it lacks standardized responses and omits some of the information that agencies previously reported.

Notably, the number of large, parent-level agencies with known AI use cases that have published an inventory has grown since the initial Stanford report, according to Christie Lawrence, a co-author of the report. Still, just 27 agencies are listed on AI.gov for these inventories, compared to the over 70 parent-level agencies that the researchers estimated were subject to this requirement. There are other issues with the inventories, too.

“Some agency inventories do not include all the required — and instructive — elements detailed in the CIO guidance,” Lawrence said in an email to FedScoop. “Furthermore, the federal government shouldn’t lose the benefits from its work to-date — keeping inventories from prior years online can help the public, agencies, and researchers understand the trends of the federal government’s use of AI.”

Experts say there needs to be more clarity about the ideal process for developing and building these inventories. Guidance from the CIO Council states that federal agencies impacted by the executive order are supposed to exclude “sensitive use cases” that might jeopardize privacy, law enforcement, national security or “other protected interests.” At the same time, agencies are ultimately responsible for the accuracy of their inventories, an OMB spokesperson told FedScoop in August.

There’s also concern, which has been highlighted by the Stanford report authors as well as other researchers, that many agencies are excluding use cases from their inventories that they’ve publicly disclosed elsewhere. And while the Department of Transportation lists use cases that have been publicly redacted, it’s not clear how many AI use cases, in total, most agencies have at their disposal.

“There’s a question about how agencies are deciding what gets included and what doesn’t, and especially when there’s other sources of information that suggests that the federal agency is using AI in a particular way,” said Elizabeth Laird, the Center for Democracy & Technology’s director of equity in civic technology.

Another potential area of improvement is the amount of information provided about use cases. In advance of an upcoming executive order on AI, a collection of civil rights organizations wrote to the Biden administration in August urging the White House to expand AI inventories to include details indicating compliance with the Blueprint for an AI Bill of Rights, a nonbinding set of principles for the technology released by the Office of Science and Technology Policy in October 2022.

The letter also suggested that each agency be required to submit an annual report documenting its progress in following those principles.

“As the federal government increasingly thinks about AI procurement, including to fulfill requirements from the AI in Government Act, the inventories can help the federal government gain a better understanding of how to better acquire AI tools,” Lawrence added.

Relatedly, tech policy experts also want more information about vendors and contractors who might be brought in by the government to build or provide AI tools. While this information is included in some agencies’ individual inventories — and is required by the most recent guidance from the CIO Council — it is not included in the consolidated database recently published on AI.gov.

“A contract ID or the company should make that [more] valuable,” said Ben Winters, senior counsel at the Electronic Privacy Information Center. “This should be able to be like a fiscal accountability tool, and as well as like an operational accountability tool.”

Inventories would be better, Torres added, if they included more information about how an AI system was tested. Listing all collaborators on a project would also be helpful, noted Anna Blue, a researcher at the Responsible Artificial Intelligence Institute who has reviewed these inventories.

Of course, broader transparency into government use of AI remains a challenge. Several experts have pointed out that records requests that members of the public can file under the Freedom of Information Act work well for documents, but don’t inherently lend themselves to accessing information about AI systems.

“Another thing I would call out is the need for a process by which the public can solicit more information,” Blue said. “A solicitation or comment process isn’t part of the EO, but I think it would help create a cycle of helpful feedback between agencies and the public.”

Senators introduce bipartisan bill to improve federal agencies’ customer service

A bipartisan trio of senators has introduced legislation intended to improve and streamline the customer service provided by federal agencies, targeting shorter wait times and better digital services.

The Improving Government Services Act, sponsored by Sens. Gary Peters, D-Mich., James Lankford, R-Okla., and John Cornyn, R-Texas, would require certain agencies to develop an “annual customer experience action plan” within a year of enactment of the bill, providing details on how to offer a better and more secure experience for taxpayers by adopting best customer service practices from the private sector.

“Taxpayers must be able to easily and efficiently reach federal agencies when they have questions about services or benefits,” Peters, chairman of the Senate Committee on Homeland Security and Governmental Affairs, said in a statement. “My commonsense bipartisan bill would require agencies to adopt customer service best practices that limit wait times and use callbacks to ensure taxpayers receive support in a timely manner.”

The bill, which will get a committee vote next week, would require federal agencies to develop a written strategy to improve customer experience. That strategy would include a plan to adopt customer service practices such as online services, improved protections for personally identifiable information, telephone call back services and employee training programs.

The legislation would direct the White House’s Office of Management and Budget to designate certain federal agencies as “high-impact service providers,” such as those that deliver key services to the public or fund state-based programs.

Federal agencies that deal with health care, public lands, loan programs, passport renewal, tax filing, customs declarations and other such key programs are likely to be designated as high impact.

“Some agencies have already successfully implemented private-sector best practices, but we need them governmentwide,” Lankford said. “Providing good customer service doesn’t have to be difficult. Let’s get this nonpartisan bill to the finish line so interacting with the federal government is less frustrating for the public.”

The bill also references the 21st Century Integrated Digital Experience Act, also known as the IDEA Act, and its push for the expansion of easy-to-use digital services through which Americans can communicate with federal agencies and programs while also maintaining in-person, telephone, postal mail and other contact options.

Nearly five years after the IDEA Act was signed into law in 2018, OMB last month issued guidance for agencies on implementing the legislation.

The new legislation is scheduled for a markup and vote in the Senate Homeland Security and Governmental Affairs Committee on Oct. 25.

GSA to add facial recognition option to Login.gov in 2024

After determining early last year that it would hold off on using facial recognition as part of its governmentwide single sign-on and identity verification platform Login.gov, the General Services Administration in 2024 will add an option for system users to verify their identity with “facial matching technology,” the agency announced Wednesday.

In 2024, GSA’s Technology Transformation Services will roll out a “proven facial matching technology” that relies on “best-in-class facial matching algorithms” and follows the National Institute of Standards and Technology’s Special Publication 800-63-3 Identity Assurance Level 2 (IAL2) guidelines, according to a GSA blog post.

Every Cabinet-level federal agency now uses Login.gov for secure sign-in and identity verification services in some way, according to GSA.

The addition of facial recognition is one of three new options that participating agencies can use beginning next year to verify the identity of users. GSA is also adding an in-person option through which users can verify their identity at a local Post Office. The agency will also add another digital option that doesn’t use facial recognition, “such as a live video chat with a trained identity verification professional,” the blog post says.

All three additional forms will be IAL2-compliant, GSA wrote.

The agency found itself in hot water earlier this year after its inspector general issued a report alleging that it misled customers by billing agencies for IAL2-compliant services, even though Login.gov did not meet those standards.

At the same time, there’s been broader controversy around the efficacy of facial recognition technologies and their tendency to produce biased results against minorities. Because of that, GSA in early 2022 determined it wouldn’t use facial recognition in Login.gov until it felt it could confidently do so in a responsible and equitable manner.

Earlier this month, Sonny Hashmi, commissioner of GSA’s Federal Acquisition Service, which houses TTS, told FedScoop how an ongoing equity study on remote identity proofing would ultimately inform the evolution of Login.gov, as well as other programs across government.

“Our hope is that as we do the study, in participation with Americans across all demographics, we get valuable data that all agencies can use to make better choices in terms of how to enable these remote biometric and digital technologies so that if there are inherent challenges for certain demographics or populations, that we can proactively address them and continue to make that access more prolific and more easy to use for all Americans,” Hashmi said on an episode of the Daily Scoop Podcast.

That study is ongoing and GSA aims to recruit as many as 4,000 participants across as many demographics as possible, he said.

“We have a responsibility as public servants to get this right. Now, ‘get this right’ means that we have to continue to increase the fidelity of the capability that we need to make available through programs such as Login.gov, to make sure that agencies can leverage and access the best-of-breed technologies when they’re looking to verify identities when they’re looking to prevent fraud in their administration of grant programs and other such programs,” Hashmi said.

He continued: “However, we need to do it thoughtfully because we don’t have the luxury of leaving parts of the population behind. We have to do it in a way that addresses, that serves all Americans equally. And that is our commitment that we have to stand by. And that requires thoughtful assessments, thoughtful analysis, and deliberate decision-making, that we can stand behind.”

IRS: Free online filing program will be available in 2024 for eligible taxpayers in 13 states

Select taxpayers in 13 states will have the option to participate next year in the IRS’s electronic Direct File pilot program, the agency announced Wednesday, marking the latest step in its efforts to simplify filing season.

As part of the “limited-scope pilot,” taxpayers in Arizona, California, Massachusetts and New York will be presented with the option to electronically file their federal returns in 2024 directly with the agency at no cost.

Additionally, taxpayers in nine states with no income tax — Alaska, Florida, New Hampshire, Nevada, South Dakota, Tennessee, Texas, Washington and Wyoming — may also be eligible to take part in the program.

IRS Commissioner Danny Werfel said in a statement that the agency will work closely with officials in the four participating states, whose revenue departments signed separate Memorandums of Understanding with the IRS last month. Information-gathering from the pilot will inform the “future direction” of Direct File, Werfel added.

While all states were invited to join the pilot, “not all states were in a position” to do so in 2024, the agency noted. Taxpayers with “relatively simple returns” in the 13 states will be eligible to participate. Those who receive the Earned Income Tax Credit and the Child Tax Credit can also partake in the Direct File pilot.

The IRS was tasked with studying the feasibility of a free, direct filing tax program as part of the agency’s nearly $80 billion funding infusion via the Inflation Reduction Act. The Direct File pilot program comes after the IRS delivered a report to Congress in May that detailed costs, benefits and operational challenges, all of which will be evaluated in the pilot program.

“We have more work in front of us on this project,” Werfel said. “The Direct File pilot is undergoing continuous testing with taxpayers to identify and resolve issues to ensure it’s user-friendly and easy to understand. We continue to finalize the pilot details and anticipate more changes before we launch for the 2024 tax season.”

How wireless tech and Network-as-a-Service offer fast-track to modernization

Federal IT officials have been actively updating their communications networks in recent years to take advantage of the convergence of IP-based voice, video, and data — and meet federal requirements to modernize their systems using the government’s Enterprise Infrastructure Solutions contract vehicle.

What agencies have underestimated, however, is how rapidly network infrastructure is evolving. The expanding capabilities of 5G, Multi-Access Edge Computing (MEC), Fixed Wireless Access (FWA) that uses 4G and 5G radio spectrum, small-cell Wi-Fi extension technology, low-latency satellite connections and higher capacity broadband capabilities are collectively redefining the nature of network infrastructure.

Together, those capabilities offer civilian and defense agencies a new generation of network versatility and the ability to reimagine and rearchitect how they support their workforces around the globe, asserts a new report from Scoop News Group underwritten by Verizon.

The report notes that federal agencies have made monumental strides in modernizing parts of their IT operations. However, without also modernizing their underlying network services to leverage cloud capabilities, “agencies risk the prospects of maintaining the equivalent of a newly renovated office tower that kept the aging, sub-par wiring and plumbing in place behind the walls,” the report suggests.

That prevents agencies from taking advantage of more agile networking and security capabilities and leaves them vulnerable to increasing strains on their networks, argues Brian Schromsky, managing partner for 5G public sector at Verizon, in the report.

He points to several critical factors that make network modernization more crucial than ever, including:

- Growing pressures to handle data effectively.

- The need for ubiquitous and resilient connectivity.

- Support for a mobile and hybrid workforce.

- Escalating risks of security breaches.

Fortunately, just as agencies discovered the inherent advantages of adopting cloud-based services for their infrastructure, platforms and applications, agencies have access to many of those same advantages by adopting a Network-as-a-Service (NaaS) model and by harnessing a new generation of wireless networking capabilities, the report says.

Recent advances in network technology are poised to give agencies a new kind of advantage by being able to augment and even leapfrog legacy networks rather than merely upgrade them, says Schromsky.

“It’s now possible to establish high-speed, secure and reliable connectivity with fixed wireless access without requiring cable or fiber to run underground. Private networking is a great choice for government agencies, as well as the private sector partners who help them build, operate, and maintain public infrastructure because it provides high levels of security and reliability,” he says in the report.

NaaS solutions, meanwhile, like those available from Verizon, allow enterprises to adopt agile, automated network platforms consumed as a service. For agencies, that means moving from vision to implementation more quickly — and connecting users to applications and data across evolving cloud and work environments more reliably.

The explosion of data and metadata accompanying artificial intelligence and machine learning work requires advanced levels of automation and network function virtualization (NFV) to optimize performance, the report argues. Turning over the mechanics of network management to a NaaS provider and taking advantage of newer wireless capabilities offer agencies the means to roll out greater bandwidth capacity and increase network security more quickly, while focusing more on mission outcomes and less on configuration bottlenecks.

This article was produced by Scoop News Group for FedScoop and sponsored by Verizon.

CGI lands multi-year EPA contract on IT enterprise development

A federal government-focused subsidiary of CGI Inc. announced on Wednesday that it won a multi-year contract worth more than half a billion dollars to provide information technology services to the Environmental Protection Agency.

The agreement is meant to boost the agency’s enterprise operations and work in support of “human health and the environment,” according to a press release shared by the company.

“We must confront the nation’s most urgent health and environmental challenges today by expanding our range of innovative technology capabilities, aligning those capabilities to our mission, and optimizing our overall mission support operations,” EPA Chief Information Officer Vaughn Noga said in a statement included in that release.

The Information Technology Enterprise Development contract “provides new opportunities to meet these challenges through technical innovation and strengthens our efforts to protect human health and the environment for the American public,” he added.

The ITED program is supposed to help automate aspects of EPA’s business operations and introduce emerging technologies, among other goals. Notably, CGI has worked with the EPA on a variety of other technology programs, including the Central Data Exchange, a key part of the agency’s electronic data reporting system.

The contract is a reminder that software and other technology play a major role in assisting environmental regulators. It also comes amid a range of other IT challenges at the agency. For example, the Government Accountability Office recently flagged that the agency’s air quality tracking systems require major updates. Earlier this summer, the agency’s watchdog found that a system used to monitor radiation included vulnerabilities.

USPTO CIO says AI adoption is held back by government culture and bureaucracy

The top tech official at the U.S. Patent and Trademark Office, which handles data on millions of patents, said Tuesday that artificial intelligence adoption faces significant barriers within the federal government due to its current culture and bureaucracy.

Jamie Holcombe, chief information officer at the patent office, said that when it comes to adoption of emerging technologies like AI, leadership within the federal government needs to change the culture and incentive structure of the workforce.

“Culture, culture, culture. I don’t care about the tech. We can solve it. We prove that you can. But it’s the culture, if they’re willing to receive [new tech]. Unless there’s a burning platform, a lot of people will say, ‘I’ll [have] somebody else do it, I’ll figure it out later,’” Holcombe said during the Google Public Sector Forum hosted by Scoop News Group in Washington, D.C.

“Especially with government bureaucracy, we have so many people that are just incentivized to sit there and punch their card, turn the paper or, you know, sign this and put it over here, I did my job,” he added. “We need to change and challenge the status quo, we need to get a sense of urgency and incentive for our government workers.”

The USPTO in 2021 sent its top engineers to Google to be certified in TensorFlow and develop neural network feedback loops for patent examiners to rate algorithms, as well as to apply machine learning and AI to patent classification, search and quality.

Holcombe was highly critical of the federal government’s approach to innovation and long-term modernization during the Google forum.

“Our budgeting process is stupid. Our procurement is stupid. Everything we do in the government is pretty stupid, when you compare it to the commercial world, right?” Holcombe said. “There’s so many lessons that no one is willing to take. Who in their right mind would run a commercial enterprise or operation using a budget that was conceived three years ago?”

He also highlighted key differences between how the federal government and the private sector operate when it comes to emerging technologies like AI.

“If we ran our government like we ran Silicon Valley, we’d be much more efficient,” Holcombe said. “Can you imagine the Silicon Valley guy saying, ‘Wait a second, I have to fill all my compliance things before I prove my product works in the marketplace? What are you freaking kidding me?’”

Google Public Sector Managing Director Aaron Weis pushed back on Holcombe regarding government compliance.

“We do compliance for the government, so we actually have to get all our Google products accredited,” Weis said. “So we’re gonna fill out all the government’s compliance forms, but we might use AI to do it now.”

Federal CISO says White House targeting AI procurement as part of conversation on looming executive order, guidance

As the White House inches closer to the release of an executive order on artificial intelligence and guidance for federal agencies on responsible use of the technology, the federal chief information security officer said AI procurement is something Biden administration officials are “actively discussing” as part of that conversation.

Speaking Tuesday at the Google Public Sector Forum, presented by Scoop News Group, Chris DeRusha, federal CISO and White House deputy national cyber director, noted that government authorization and assessment processes will be especially important when it comes to AI procurement.

“How do we ensure that we have an agile way of assessing the appropriate tools for government use and government-regulated data types? We can’t not do that,” DeRusha said.

“We understand everybody’s really wanting to jump into the latest tools. But look, you know, some of these companies aren’t fully vetted yet, they are new entrants, and we have to ensure that you’re responsible for protecting federal data,” he added.

DeRusha said the government has “to go full bore in learning how to use this technology because our adversaries will do that.” To that end, the Biden administration last week released a database on AI.gov detailing hundreds of AI use cases within the federal government.

Having that database should enable agencies to better drill down on specific AI applications, perform tests, launch pilot programs and ultimately see where the government can get “maximum benefit.” DeRusha cited better safety outcomes in transportation agencies as one possibility.

And while “unintentional misuse” of AI worries DeRusha, ultimately the “benefits are so positive” for federal agencies when it comes to the technology.

Also top of mind for DeRusha is the implementation of the Biden administration’s National Cybersecurity Strategy, which was released in March, and the White House’s National Cyber Workforce and Education Strategy, published in July.

DeRusha touted the benefits of having public-facing plans that note agency-specific responsibilities, quarterly targets and other details, essentially serving as a check on government officials to hold “ourselves accountable to ensure that we’re really making progress on all these things.”

And after “decades of investments in addressing legacy modernization challenges,” DeRusha said now is the time for the government to prepare for “massive” long-term challenges, including, for example, those related to AI and the White House’s Counter-Ransomware Initiative, which now involves “almost 50 countries.”

“We’ve taken on pretty much every big challenge that we’ve been talking about for a couple of decades,” DeRusha said. “And we’re taking a swing and making” progress.

Government gears up to embrace generative AI

Artificial intelligence (AI) is on the brink of transforming government agencies, promising to elevate citizen services and boost workforce productivity. As agencies gear up to integrate generative AI into their operations, understanding the opportunities, concerns and sentiments involved becomes crucial to preparing for this AI-driven era of government operations.

A new report, “Gauging the impact of Generative AI on Government,” finds that three-fourths of agency leaders polled said that their agencies have already begun establishing teams to assess the impact of generative AI and are planning to implement initial applications in the coming months.

Based on a new survey of 200 prequalified government program and operations executives and IT and security officials, the report identified critical issues and concerns executives face as they consider the adoption of generative AI in their agencies.

The report, produced by Scoop News Group for FedScoop and underwritten by Microsoft, is among the first government surveys to assess the perceptions and plans of federal and civilian agency leaders following a series of White House initiatives to develop safeguards for the responsible use of AI. The U.S. government recently disclosed that more than 700 AI use cases are underway at federal agencies, according to a database maintained by AI.gov.

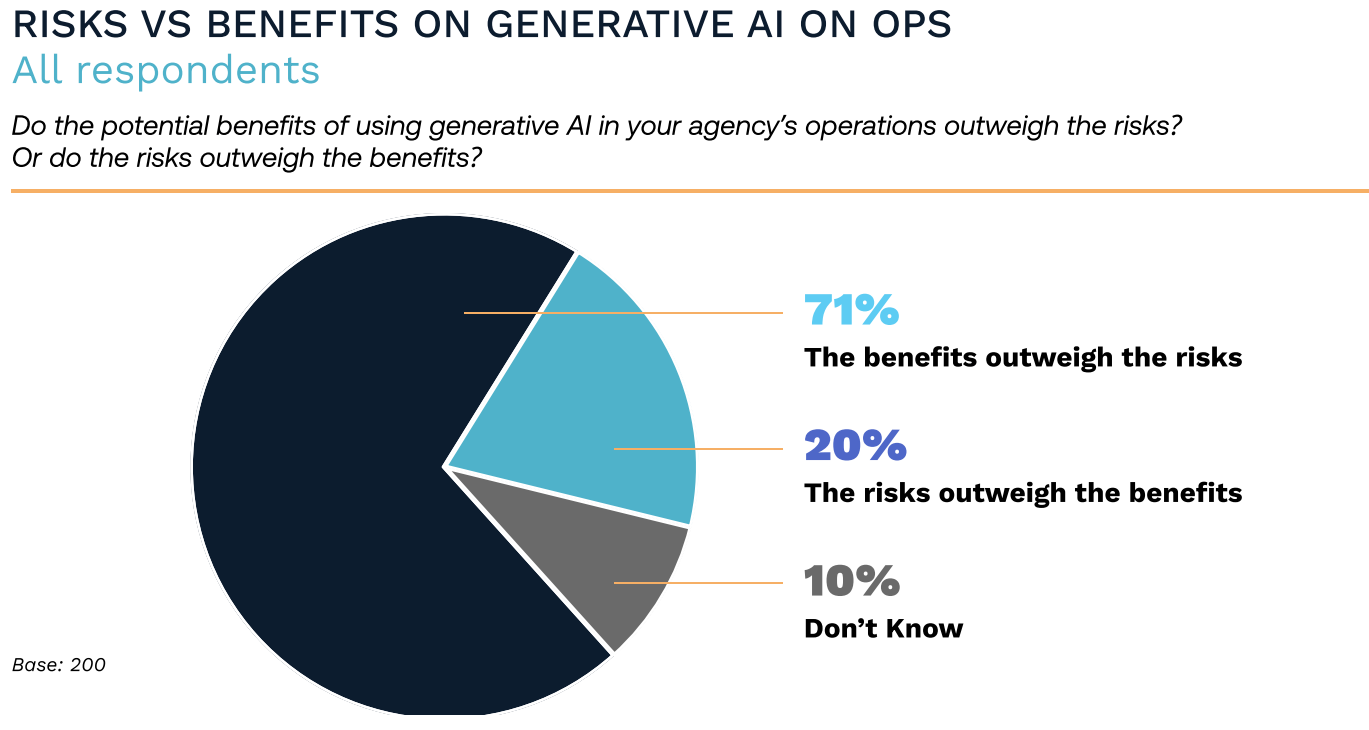

The findings show that a majority of respondents are actively exploring the application of generative AI. An overwhelming 84% of respondents indicated that their agency leadership considers understanding the impact of generative AI a critical or important priority for agency operations. Significantly, 71% believe that the potential advantages of employing generative AI in their agency’s operations outweigh the perceived risks.

However, the survey also highlights several areas of hesitation that warrant attention. The top concerns related to the risks of using generative AI include a lack of controls to ensure ethical/responsible information generation, a lack of ability to verify/explain generated output and potential abuse/distortion of government-generated content in the public domain.

These are unquestionably valid concerns that agencies must confront head-on. Encouragingly, many agencies are already taking proactive steps to address these challenges, with a significant proportion (71%) having established enterprise-level teams or offices devoted to developing AI policies and resources—a testament to their commitment to effective AI governance.

Impact on agency functions

Despite these concerns, generative AI is poised to significantly impact a range of agency functions and use cases. Nearly half of agencies are gearing up to explore generative AI’s potential within the next 12 months.

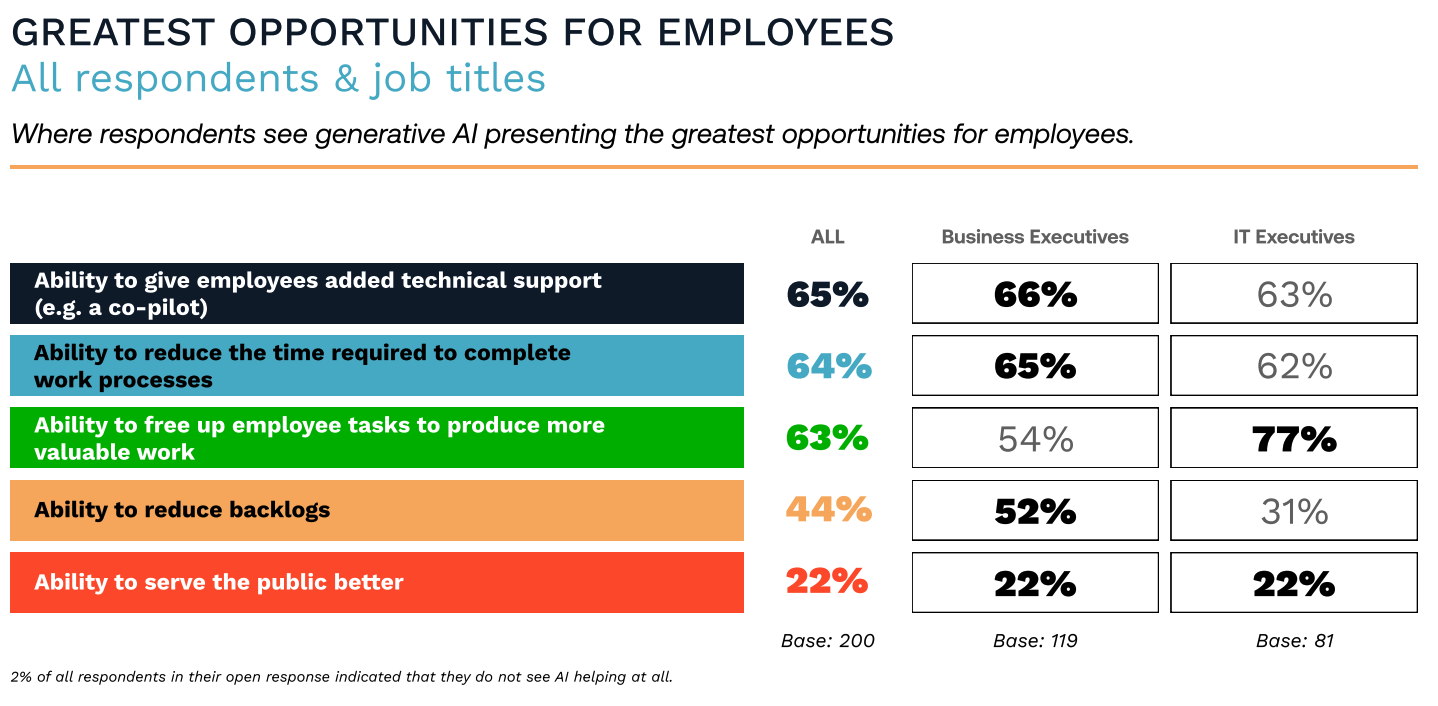

In particular, leaders are eyeing improvements in their business operations and workflows, with 38% expressing confidence that generative AI can enhance efficiency. Close to two-thirds of respondents said they believe generative AI is likely to help employees reduce the time required to complete work processes, freeing up time for more valuable work.

Another domain where generative AI is gaining traction is mission intelligence and execution. Over half of the respondents are either assessing or planning to evaluate its impact, with a similar percentage looking to implement AI to support mission activities. Confidence levels in generative AI’s ability to deliver value and cost savings in this realm are encouraging.

Interestingly, the survey underscores differing points of view about implementation priorities between business and IT executives. Roughly half of the agency business executives polled expect one or more generative AI applications to be rolled out in the next 6 to 12 months for data analytics, IT development/cybersecurity and business operations. In contrast, roughly two-thirds of IT executives, who are presumably closer to implementation, expect business operations to get new generative AI applications in the next 6 to 12 months, followed by case management and cybersecurity use cases.

However, the findings also suggest a deeper story about the need for training, according to Steve Faehl, federal security chief technology officer at Microsoft, who previewed the results. Half of all executives polled (and 64% of those at defense and intelligence agencies) cited a lack of employee training to use generative AI responsibly as a top concern, but only 32% identified developing employee training programs as a critical employee concern, suggesting a potential disconnect in how organizations view AI training.

“Effective training from industry could close one of the biggest risks identified by the U.S. government in support of responsible AI objectives and government employees,” he said. “Those who develop controls having an understanding of risk from their own use cases will create a fast path for controls for other use cases.” As a best practice, he added, “experimentation, planning, and policymaking should be iterative and progress hand-in-hand to inform policymakers of real-world versus perceived risks and benefits.”

Recommendations

As government agencies balance innovation and responsibility in serving the public’s interest, generative AI will require agency leaders to confront a new set of dynamics and begin recalibrating several strategic decisions in the near term, the study concludes. Among its recommendations, the study urges agency executives to prepare for a faster pace of change and to establish flexible governance policies that can evolve along with AI applications.

It also recommends fostering environments for experimentation. By allowing a broad range of employees to experience generative AI’s potential, agencies stand to learn faster and address lingering worries about job security and satisfaction.

In addition to prioritizing use cases, the study recommends that agency leaders devote greater attention to training employees to use generative AI responsibly and capitalize on emerging resources, including NIST’s AI Risk Management Framework and resources assembled by the National AI Initiative.

Download “Gauging the Impact of Generative AI on Government” for the detailed findings.

This article was produced by Scoop News Group for FedScoop and sponsored by Microsoft.