FAA says it does not use ChatGPT in any systems

The Federal Aviation Administration on Wednesday denied using the AI-powered language model ChatGPT in any of its systems.

In a statement to FedScoop, the agency said it is not deploying the chatbot anywhere, including in mission-critical air traffic control systems.

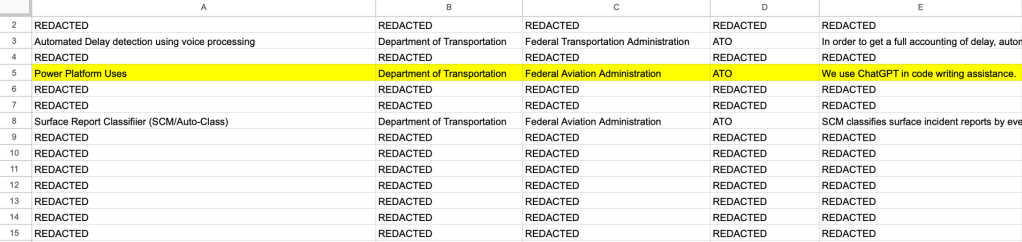

The clarification comes after the Department of Transportation (DOT) listed ChatGPT in a catalog of current AI use cases published on the department’s website. In that entry, the department said the FAA, and in particular the agency’s Air Traffic Organization (ATO), was using “ChatGPT in code writing assistance.”

The entry stated that the ChatGPT use case had been in production for less than a year and that ATO had not used agency training in its work with the chatbot.

The FAA said: “The FAA does NOT use Chat GPT in any of its systems, including air traffic systems. The entry was made in error and has been updated.”

Following FedScoop’s inquiry, DOT removed the reference to the chatbot from its website. The update comes amid concerns over how agencies catalog their artificial intelligence use cases and over the potential safety risks of deploying the technology in mission-critical systems.

FedScoop reached out to OpenAI for comment.

In a report published in December, academics at Stanford University warned that as many as half of federal government departments had yet to provide a complete inventory of AI use cases.

Under an executive order issued by the Trump administration, federal agencies are required to publish an inventory of their non-classified and non-sensitive artificial intelligence use cases.

In a brief published last month, the Government Accountability Office noted that in addition to safety concerns, generative AI tools raise serious privacy and national security issues.

Responding to emailed questions from FedScoop, Hammond Pearce, a computer science and engineering lecturer at the University of New South Wales, described the risks his research has observed when ChatGPT is used to produce code.

“We have in our own research group measured these kinds of models (including ChatGPT) for producing buggy or insecure code, and we’ve found numerous instances of each,” Pearce said.

He added: “It would be an obvious concern if the agency or agency programmers were adopting and using the code without properly testing it, especially if the target application is safety-critical (which, for the FAA, might be the case).”