US AI Safety Institute taps Scale AI for model evaluation

The U.S. AI Safety Institute has selected Scale AI as the first third-party evaluator authorized to assess AI models on its behalf, opening a new channel for testing.

That agreement will allow a broader range of model builders to access voluntary evaluation, according to a Scale AI release shared with FedScoop ahead of the Monday announcement. Participating companies will be able to test their models once and, if they choose, share those results with AI safety institutes around the world.

Criteria for those evaluations will be developed jointly by the AI data labeling company and the AISI. For Scale’s part, that work will be led by its research arm, the Safety, Evaluation, and Alignment Lab, or SEAL. Per the announcement, the evaluations will look at performance in areas such as math, reasoning, and AI coding.

“SEAL’s rigorous evaluations set the standard for how cutting-edge AI systems meet the highest standards,” Summer Yue, director of research at Scale AI, said in a statement. “This agreement with the U.S. AISI is a landmark step, providing model builders an efficient way to vet the technology before reaching the real world.”

Thus far, voluntary testing by the AI Safety Institute, which is housed in the Department of Commerce, has involved large-scale AI companies. OpenAI and Anthropic formally signed testing agreements with the safety body in August that would allow the AISI to access new models before and after their release for testing and risk mitigation. In November, the AISI and its counterpart in the U.K. released their first joint evaluation of Anthropic’s Claude 3.5 Sonnet.

In its announcement, Scale AI called the agreement a “new phase in public-private sector collaboration” and highlighted that companies can choose whether to share their test results with the AISI. Without third-party testing, the release said, governments would need to spend time and money building out their own testing infrastructure and would still be unlikely to meet the growing demand.

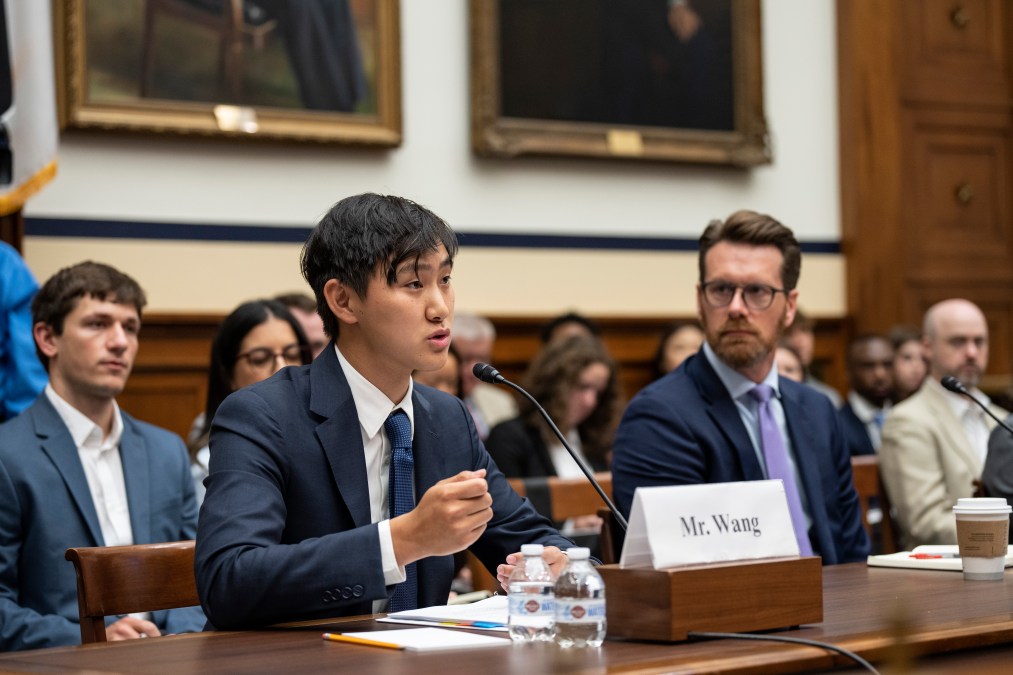

The announcement comes as AI leaders from across the world, including Scale AI’s CEO, Alexandr Wang, are set to gather in Paris this week for the Artificial Intelligence Action Summit.

Scale AI is a San Francisco-based company that primarily provides training data for AI and helps other companies manage their models. It also has prior experience working with the government on testing and evaluation. Last year, the Department of Defense selected Scale AI for a one-year contract to come up with a way to test and evaluate its large language models.