Bipartisan House bill asks agencies to label AI-generated content

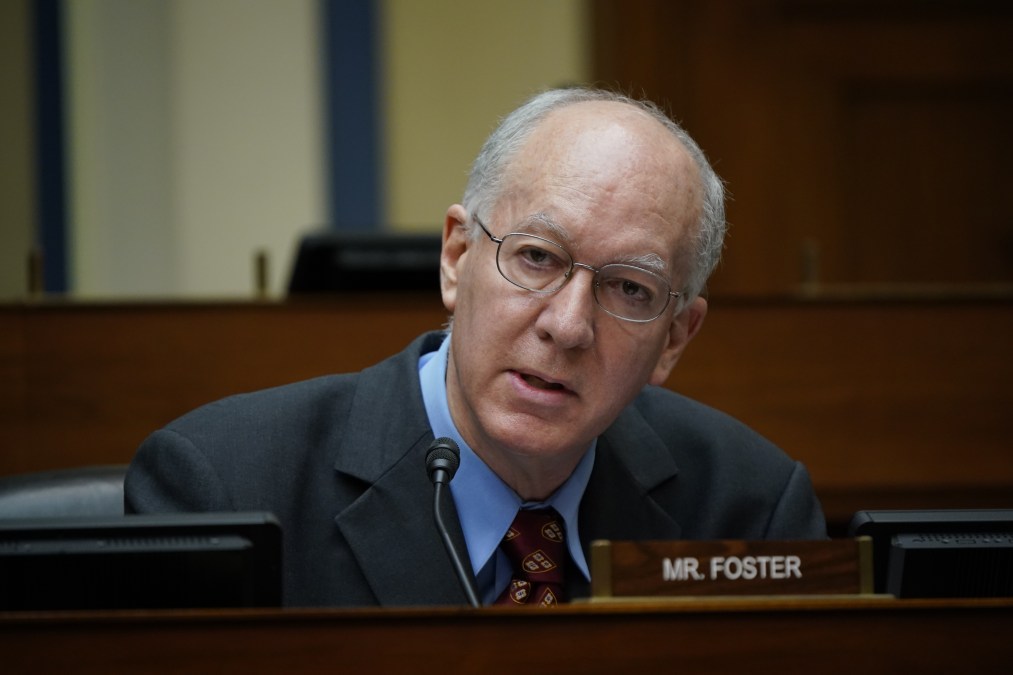

Under a new bipartisan bill introduced Wednesday by Reps. Bill Foster, D-Ill., and Pete Sessions, R-Texas, federal agencies would be barred from distributing AI-generated content without a disclosure or human review.

The Responsible and Ethical AI Labeling (REAL) Act isn’t looking to restrict government AI use. Instead, it would prohibit AI-generated content from circulating without clear labels or human oversight. The rules would cover text, images, audio and video.

“In an age of disinformation, Americans should be able to trust that information coming from official government sources is legitimate and based in reality,” Foster said in a statement accompanying the bill text Wednesday. “There must be clear guidelines to ensure those at our federal agencies and in our nation’s highest office are not using AI in a way that could purposefully or inadvertently mislead the American public.”

Sessions echoed the sentiment, characterizing the legislation as a “commonsense step to maintain trust and strengthen transparency in government communications.”

The legislation carves out exceptions, including communications not intended for public release and content created for any classified purpose. AI-generated content that agency staff review before publication is also exempt from the disclosure requirement.

AI-generated content disclosures have gained ground internationally. South Korea this week approved a law requiring labels on AI-generated advertising, citing risks to consumers. The European Union has also moved to address the issue by including labeling requirements for AI-generated content in its AI Act.

Similar legislation has been introduced at the state and federal levels before, though with little success. The Senate’s bipartisan COPIED Act, for example, which aimed to address the lack of federal transparency guidelines for AI-generated content, appears to have stalled after its introduction in April.

Even as lawmakers continue to pursue labeling laws, researchers have questioned how effective disclosures actually are.

“We found that adding labels changes people’s perceptions of whether the content was authored by AI or a human — suggesting that labels improve transparency — but there was no evidence that these labels significantly change the persuasiveness of the content,” researchers from Stanford’s Institute for Human-Centered Artificial Intelligence said in a report published this summer.

The researchers conducted a randomized experiment with 1,500 U.S. participants, comparing their responses to labeled and unlabeled AI-generated policy messages across several issue areas.

“Policymakers should continue to consider the potential positive impacts of AI content label regulations and standards,” the researchers said in the report. “But if the policy goal is to decrease the persuasive effect of AI-generated messages, labels may not be the complete solution.”