- Sponsored

Government’s AI inflection point: Why on-prem tech modernization matters

The increasing adoption of artificial intelligence across federal agencies represents the most significant technology disruption in a generation, placing government leaders at a critical inflection point.

From a leadership perspective, this isn’t just a technological shift; AI is forcing a fundamental reimagining of how government operates, interacts with citizens, and safeguards national security. How well agencies prepare for that impact will, in many ways, be determined by the steps leaders take now and in the coming months to embrace AI and develop the capacity to manage it.

That involves many considerations, but one factor crucial to those efforts is ensuring that agencies have the foundational infrastructure and flexibility necessary to effectively leverage AI to support their operations, employees and the American public.

The cloud’s limits in the AI era: A new paradigm

For the past two decades, the cloud has played a crucial role in democratizing and accelerating access to modernized technologies. However, the unique demands of AI — and the changing nature of data collection and processing — are forcing leaders to take a new and more nuanced approach to their infrastructure investments. There are several reasons:

First, sending massive datasets across the Internet for AI processing in remote cloud environments is becoming increasingly expensive and time-consuming. The sheer volume of data generated and consumed by AI applications, coupled with the need for real-time processing and analysis, contributes to escalating costs, exacerbates latency concerns and hinders critical decision-making and operational efficiency.

Second, enterprises continue to collect and analyze more information than ever before from users and devices at the edge of their operations. That pushes high-performance computing demands farther from the cloud, into environments that often lack high-bandwidth connectivity.

Third, maintaining data sovereignty, confidentiality and compliance will become increasingly complex and dynamic as AI workloads grow. That will make private on-premises AI-enabled enterprise clouds essential in agencies’ hybrid multi-cloud mix.
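
To put the first reason in concrete terms, the short sketch below estimates how long it takes to move a dataset over a network link. The function name `transfer_hours`, the 500 TB dataset, the link speeds and the 80% usable-throughput figure are all illustrative assumptions, not measurements from any agency or vendor.

```python
def transfer_hours(dataset_terabytes: float, link_gbps: float,
                   efficiency: float = 0.8) -> float:
    """Estimate the hours needed to move a dataset over a network link.

    efficiency is the assumed fraction of the nominal link speed that is
    actually usable after protocol overhead and contention (hypothetical).
    """
    if dataset_terabytes < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("sizes and speeds must be positive; efficiency in (0, 1]")
    bits = dataset_terabytes * 1e12 * 8              # decimal terabytes to bits
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits / usable_bits_per_second / 3600      # seconds to hours


if __name__ == "__main__":
    # Hypothetical scenario: a 500 TB dataset over two different links.
    for gbps in (1, 10):
        hours = transfer_hours(500, gbps)
        print(f"{gbps:>2} Gbps link: {hours:,.1f} hours ({hours / 24:,.1f} days)")
```

Under these assumptions, even a 10 Gbps link needs several days to move the dataset once, before any processing begins. That is the kind of delay that makes processing data closer to where it is collected attractive.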

In all likelihood, AI will become the principal workload driving government operations by 2030. That will require agencies to plan now for accelerated, AI-ready on-premises infrastructure that works alongside cloud services.

Reassessing hardware: The engine of AI innovation

Planning for an AI future requires a fundamental rethinking of data processing hardware. Agencies must move beyond general-purpose processors and embrace specialized hardware solutions designed to handle AI’s unique demands. That includes looking more closely at:

- Purpose-built AI accelerators: While AI workloads increasingly require high-performance computing capability, not every AI workload requires a fleet of graphics processors. CPUs with built-in AI accelerators, like Intel’s Xeon processors with P-cores, or specially designed accelerators like Intel’s Gaudi 3, are game changers. These chips are built to run inference workloads with strong performance and energy efficiency, especially at the network edge. Enterprise IT procurement teams increasingly see the value of focusing on FLOPS (floating-point operations per second) per watt, rather than raw FLOPS alone, when evaluating their hardware buys and total cost of ownership. Agencies can achieve significant cost savings and a smaller environmental footprint by optimizing their infrastructure for overall performance and sustainability.

- Specialized networking infrastructure: To effectively handle the massive data flows generated by AI applications, agencies also need to ensure they are investing appropriately in specialized networking infrastructure that can connect pools of data from users, devices, and systems to the cloud and on-premises data centers in a unified, secure fashion.
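
The performance-per-watt comparison mentioned in the first item above can be sketched in a few lines. The `Accelerator` class, the option names and every throughput and power figure below are hypothetical placeholders chosen for illustration; they are not specifications for any Intel, HPE or other product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    sustained_tflops: float   # assumed sustained throughput, in TFLOPS
    power_watts: float        # assumed power draw under load, in watts

    @property
    def gflops_per_watt(self) -> float:
        """Throughput delivered per watt of power, in GFLOPS/W."""
        return self.sustained_tflops * 1000 / self.power_watts


def rank_by_efficiency(options: list[Accelerator]) -> list[Accelerator]:
    """Return the options ordered from most to least energy-efficient."""
    return sorted(options, key=lambda a: a.gflops_per_watt, reverse=True)


# Hypothetical candidates: option_a is faster, option_b draws far less power.
option_a = Accelerator("option_a", sustained_tflops=400, power_watts=700)
option_b = Accelerator("option_b", sustained_tflops=250, power_watts=350)

if __name__ == "__main__":
    for acc in rank_by_efficiency([option_a, option_b]):
        print(f"{acc.name}: {acc.sustained_tflops:.0f} TFLOPS, "
              f"{acc.gflops_per_watt:.1f} GFLOPS/W")
```

In this made-up comparison, the option with lower raw throughput ranks first once power draw is taken into account. A real evaluation would use measured throughput on the agency’s own workloads and would also weigh cooling, facilities and acquisition costs.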

Building an AI-ready enterprise: Partnering for success

Finally, agencies can get a head start on determining how best to build an AI-ready enterprise by looking at the work other agencies and organizations have accomplished with the help of Hewlett Packard Enterprise. With decades of experience in high-performance computing, HPE is uniquely positioned to help government agencies plan for AI’s intensive computing demands.

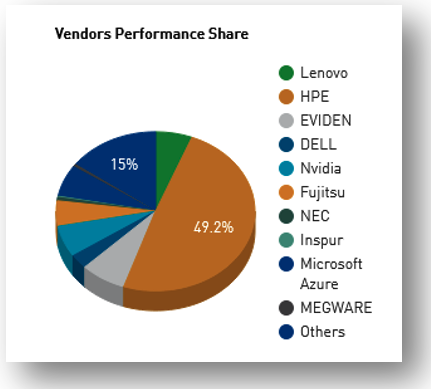

HPE systems power seven of the ten fastest supercomputers in the world, as ranked on the TOP500 list, and account for 49% of the operational capability of the world’s top 500 supercomputers. HPE’s and Intel’s recent delivery of the Aurora exascale supercomputer for the U.S. Department of Energy’s Argonne National Laboratory demonstrates HPE’s expertise in designing, manufacturing, installing and managing AI-optimized infrastructure to meet virtually any requirement.

HPE also leads in energy efficiency. Four of the top ten most energy-efficient supercomputers run on HPE hardware. Moreover, HPE’s work with government agencies and academic research institutions and its affinity for public-private partnerships reflect its expertise in meeting government agencies’ unique requirements.

The AI revolution is upon us. Agency leaders must ensure their IT investment plans fully reflect the latest thinking on what an AI-ready environment looks like and what’s required to get there.

Learn more about how Hewlett Packard Enterprise and Intel can help your agency modernize more effectively for the AI era.