At GTC, we unveiled Volta, our greatest generational leap since the invention of CUDA. It incorporates 21 billion transistors. It’s built on a 12nm NVIDIA-optimized TSMC process. It includes the fastest HBM memories from Samsung. Volta features a new numeric format and CUDA instruction that perform 4×4 matrix operations–an elemental deep learning operation–at super-high speeds.
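
To make the 4×4 matrix operation concrete, here is a minimal Python sketch of a 4×4 multiply-accumulate, D = A×B + C. It is an illustration only, not the Volta instruction itself: the function names `to_fp16` and `matmul_accumulate_4x4` are hypothetical, and the choice of half-precision inputs with higher-precision accumulation is an assumption about the numeric format, which this post does not spell out.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def matmul_accumulate_4x4(a, b, c):
    """Return d = a*b + c for 4x4 matrices given as nested lists.

    Assumption for illustration: a and b are rounded to half precision,
    while the running sum is kept in a wider type (a Python float here).
    """
    n = 4
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = c[i][j]  # start from the accumulator input
            for k in range(n):
                acc += a16[i][k] * b16[k][j]
            d[i][j] = acc
    return d


# Usage: multiply by the identity and add a zero matrix.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
values = [[float(4 * i + j) for j in range(4)] for i in range(4)]
zeros = [[0.0] * 4 for _ in range(4)]
print(matmul_accumulate_4x4(identity, values, zeros))
```

Deep learning layers reduce largely to many small products of this shape, which is why a single instruction for the whole 4×4 operation matters.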

Each Volta GPU delivers 120 teraflops. And our DGX-1 AI supercomputer interconnects eight Tesla V100 GPUs to generate nearly one petaflops of deep learning performance.
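
The "nearly one petaflops" figure follows from simple arithmetic on the numbers above. The sketch below assumes peak throughput adds up linearly across the eight GPUs and ignores any interconnect overhead; the constant names are illustrative.

```python
# Figures quoted in the text.
TERAFLOPS_PER_V100 = 120
GPUS_PER_DGX1 = 8
TERAFLOPS_PER_PETAFLOPS = 1000

# Assumption: peak throughput scales linearly with GPU count.
dgx1_teraflops = TERAFLOPS_PER_V100 * GPUS_PER_DGX1
dgx1_petaflops = dgx1_teraflops / TERAFLOPS_PER_PETAFLOPS

print(f"DGX-1 peak: {dgx1_teraflops} teraflops = {dgx1_petaflops:.2f} petaflops")
```

Eight GPUs at 120 teraflops each give 960 teraflops, or 0.96 petaflops.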

Google’s TPU

Also last week, at its I/O conference, Google announced its TPU2 chip, with 45 teraflops of performance.

It’s great to see the two leading teams in AI computing race while we collaborate deeply across the board–tuning TensorFlow performance, and accelerating the Google cloud with NVIDIA CUDA GPUs. AI is the greatest technology force in human history. Efforts to democratize AI and enable its rapid adoption are great to see.

Powering Through the End of Moore’s Law

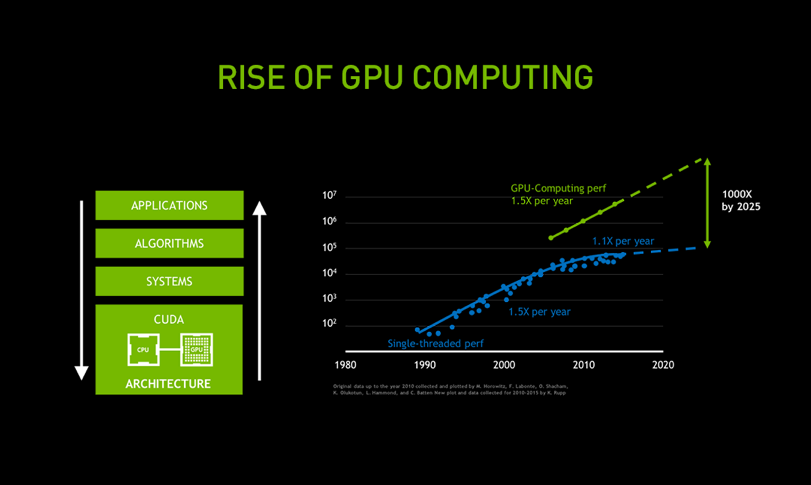

As Moore’s law slows down, GPU computing performance, powered by improvements in everything from silicon to software, surges.

The AI revolution has arrived despite the fact that Moore’s law–the combined effect of Dennard scaling and CPU architecture advance–began slowing nearly a decade ago. Dennard scaling, whereby reducing transistor size and voltage allowed designers to increase transistor density and speed while maintaining power density, is now limited by device physics.

CPU architects can harvest only modest ILP–instruction-level parallelism–and only with large increases in circuitry and energy. So, in the post-Moore’s law era, a large increase in CPU transistors and energy results in a small increase in application performance. Performance has recently increased by only 10 percent a year, versus 50 percent a year in the past.
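
The gap between 10 percent and 50 percent a year looks modest in any single year but compounds quickly. As a rough sketch, the snippet below compounds both rates over ten years; the ten-year horizon and the helper name `compound_growth` are illustrative assumptions, not figures from this post.

```python
def compound_growth(annual_rate: float, years: int) -> float:
    """Total performance multiple after compounding annual_rate for years."""
    return (1.0 + annual_rate) ** years


DECADE = 10  # illustrative horizon, chosen for this example only

past = compound_growth(0.50, DECADE)    # 50 percent a year
recent = compound_growth(0.10, DECADE)  # 10 percent a year

print(f"50% a year for {DECADE} years: {past:.1f}x")
print(f"10% a year for {DECADE} years: {recent:.1f}x")
```

Over a decade, 50 percent a year compounds to roughly 58x, while 10 percent a year compounds to about 2.6x.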

The accelerated computing approach we pioneered targets specific domains of algorithms; adds a specialized processor to offload the CPU; and engages developers in each industry to accelerate their application by optimizing for our architecture. We work across the entire stack of algorithms, solvers and applications to eliminate all bottlenecks and achieve the speed of light.

That’s why Volta unleashes incredible speedups for AI workloads. It provides a 5X improvement over Pascal, the current-generation NVIDIA GPU architecture, in peak teraflops, and 15X over the Maxwell architecture, launched just two years ago–well beyond what Moore’s law would have predicted.
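
One way to put the 15X figure in perspective is to convert it to an annual rate and set it beside the 50 percent and 10 percent yearly gains quoted earlier. This is a back-of-the-envelope sketch: it treats "two years" as exactly 2.0, and it assumes peak GPU teraflops and CPU application performance can be compared on a single scale. The helper name `annualized_factor` is hypothetical.

```python
def annualized_factor(total_speedup: float, years: float) -> float:
    """Per-year multiple that compounds to total_speedup over years."""
    return total_speedup ** (1.0 / years)


YEARS = 2.0  # assumption: "two years" taken as exactly 2.0

volta_per_year = annualized_factor(15.0, YEARS)  # 15X over Maxwell
cpu_past_two_years = 1.50 ** YEARS               # 50 percent a year
cpu_recent_two_years = 1.10 ** YEARS             # 10 percent a year

print(f"Volta vs. Maxwell, per year: {volta_per_year:.2f}x")
print(f"50% a year over two years:   {cpu_past_two_years:.2f}x")
print(f"10% a year over two years:   {cpu_recent_two_years:.2f}x")
```

Under these assumptions, 15X in two years works out to nearly 3.9x a year, against 2.25x over two years at the historical CPU rate and 1.21x at the recent one.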

Accelerate Every Approach to AI

A sprawling ecosystem has grown up around the AI revolution.

Such leaps in performance have drawn innovators from every industry, with the number of startups building GPU-driven AI services growing more than 4x over the past year to 1,300.

No one wants to miss the next breakthrough. Software is eating the world, as Marc Andreessen said, but AI is eating software.

The number of software developers following the leading AI frameworks on the GitHub open-source software repository has grown to more than 75,000 from fewer than 5,000 over the past two years.

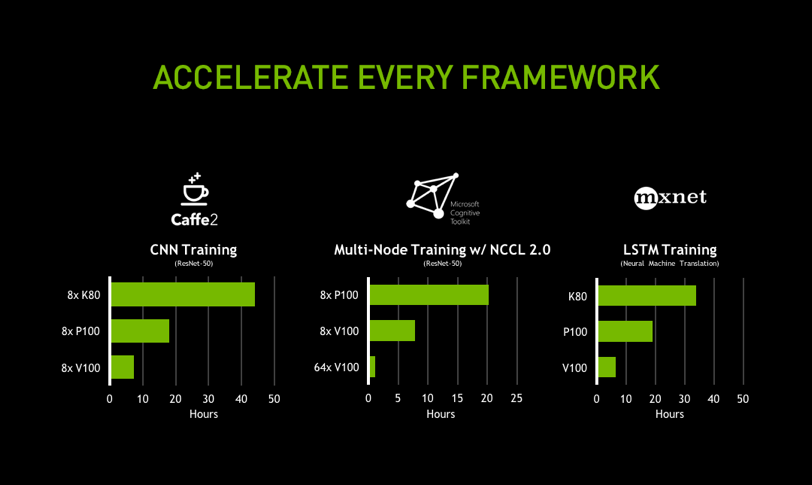

The latest frameworks can harness the performance of Volta to deliver dramatically faster training times and higher multi-node training performance.

Deep learning is a strategic imperative for every major tech company. It increasingly permeates every aspect of work from infrastructure and tools to how products are made. We partner with every framework maker to wring out the last drop of performance. By optimizing each framework for our GPU, we can improve engineer productivity by hours and days for each of the hundreds of iterations needed to train a model. Every framework–Caffe2, Chainer, Microsoft Cognitive Toolkit, MXNet, PyTorch, TensorFlow–will be meticulously optimized for Volta.

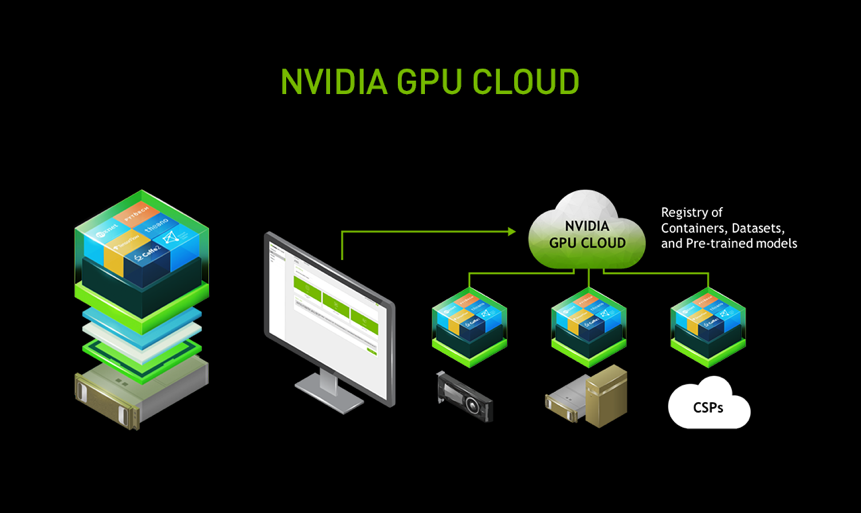

The NVIDIA GPU Cloud platform gives AI developers access to our comprehensive deep learning software stack wherever they want it–on PCs, in the data center or via the cloud.

We want to create an environment that lets developers do their work anywhere, and with any framework. For companies that want to keep their data in-house, we introduced powerful new workstations and servers at GTC.

Perhaps the most vibrant environment is the $247 billion market for public cloud services. Alibaba, Amazon, Baidu, Facebook, Google, IBM, Microsoft and Tencent all use NVIDIA GPUs in their data centers.

To help innovators move seamlessly to cloud services such as these, at GTC we launched the NVIDIA GPU Cloud platform, which contains a registry of pre-configured and optimized stacks of every framework. Each layer of software and all of the combinations have been tuned, tested and packaged up into an NVDocker container. We will continuously enhance and maintain it. We fix every bug that comes up. It all just works.

A Cambrian Explosion of Autonomous Machines

Deep learning’s ability to detect features from raw data has created the conditions for a Cambrian explosion of autonomous machines–IoT with AI. There will be billions, perhaps trillions, of devices powered by AI.

At GTC, we announced that one of the 10 largest companies in the world, and one of the most admired, Toyota, has selected NVIDIA for their autonomous car.

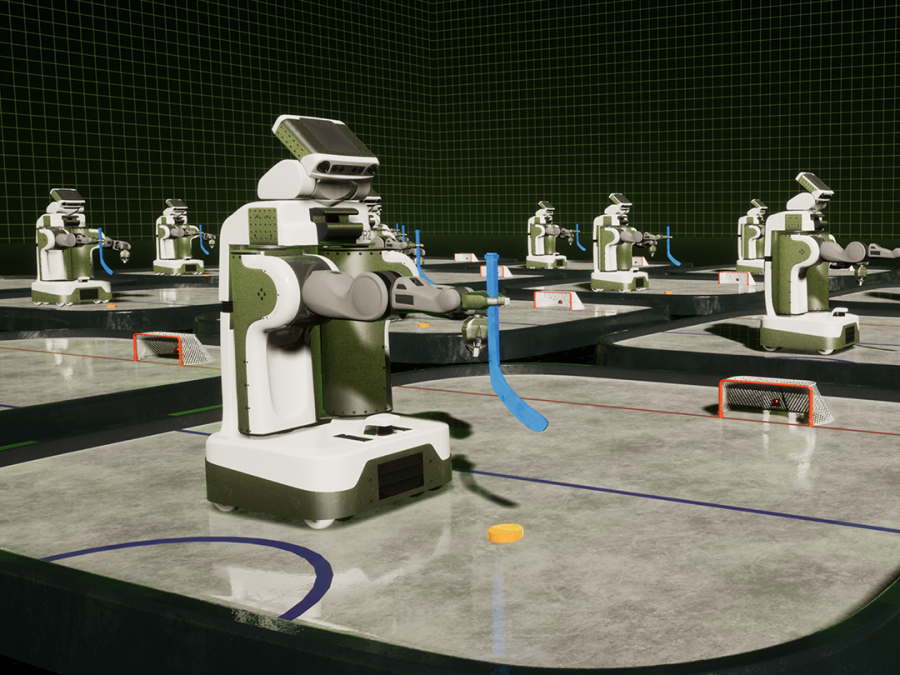

We also announced Isaac, a virtual robot that helps make robots. Today’s robots are hand programmed, and do exactly and only what they were programmed to do. Just as convolutional neural networks gave us the computer vision breakthrough needed to tackle self-driving cars, reinforcement learning and imitation learning may be the breakthroughs we need to tackle robotics.

Once trained, the brain of the robot would be downloaded into Jetson, our AI supercomputer in a module. The robot would stand and adapt to any differences between the virtual and real worlds. A new robot is born. For GTC, Isaac learned how to play hockey and golf.

Finally, we’re open-sourcing the DLA, Deep Learning Accelerator–our version of a dedicated inferencing TPU–designed into our Xavier superchip for AI cars. We want to see the fastest possible adoption of AI everywhere. No one else needs to invest in building an inferencing TPU. We have one for free–designed by some of the best chip designers in the world.

Enabling the Einsteins and Da Vincis of Our Era

These are just the latest examples of how NVIDIA GPU computing has become the essential tool of the da Vincis and Einsteins of our time. For them, we’ve built the equivalent of a time machine. Building on the insatiable technology demand of 3D graphics and market scale of gaming, NVIDIA has evolved the GPU into the computer brain that has opened a floodgate of innovation at the exciting intersection of virtual reality and artificial intelligence.
