NASA appears to step back from the term ‘artificial general intelligence’

The terminology NASA once used to refer to artificial general intelligence has changed, the space agency said in response to questions from FedScoop about emails obtained through a public records request, signaling the ways that science-focused federal agencies might be discussing emerging technologies in the age of generative AI.

Building artificial general intelligence, a powerful form of AI that could theoretically rival humans, is still a distant goal, but it remains a key objective of companies like OpenAI and Meta. It’s also a hotly contested topic among technology researchers and civil society, and one that some feel could end up distracting from more immediate AI risks, like bias, privacy, and cybersecurity.

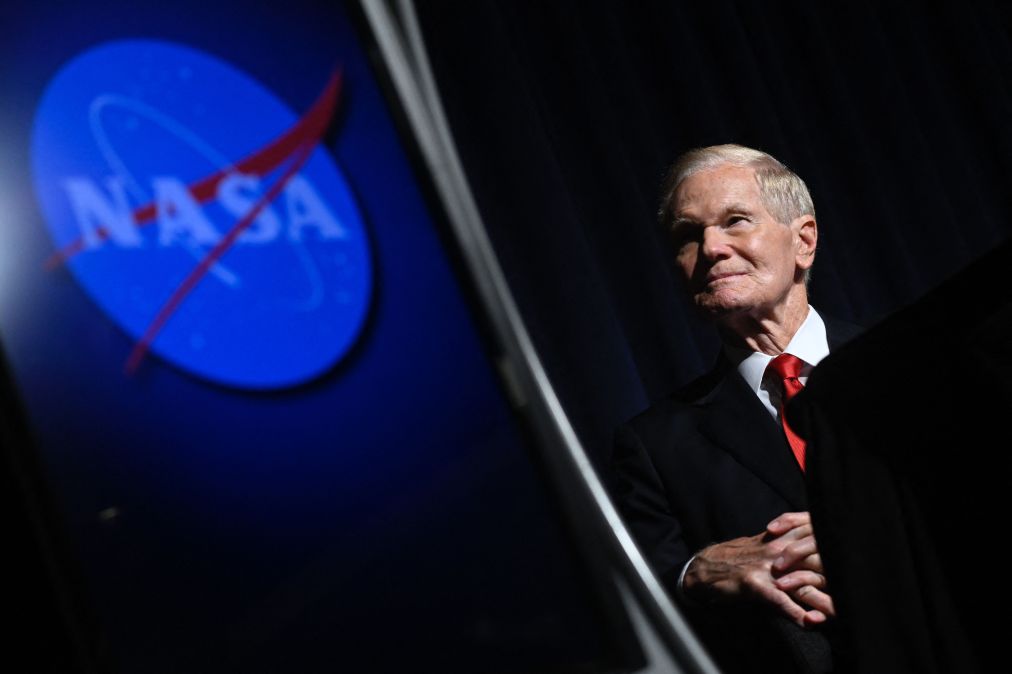

NASA is one of the few government agencies that’s expressed any particular interest in AGI issues. Many federal agencies remain focused on more immediate applications of AI, such as using machine learning to process documents. Jennifer Dooren, NASA’s deputy news chief, said in a statement to FedScoop that the agency is “committed to formalizing protocols and processes for AI usage and expanding efforts to further AI innovations across the agency.”

A framework for the ethical use of artificial intelligence published by the space agency in April 2021 made reference to both artificial general intelligence and artificial super intelligence. In response to FedScoop questions about the status of this work, NASA said “the terminology of AI” has changed, pointing to the agency’s handling of generative artificial intelligence, which typically includes the kind of large language models that fuel systems like ChatGPT. (Whether systems like ChatGPT eventually serve as a foundation for AGI remains up for debate among researchers.)

“NASA is looking holistically at Artificial Intelligence and not just the subparts,” Dooren said. “The terms from this past framework have evolved. For example, the terms AGI and ASI could now be viewed as generative AI (genAI) today.”

The agency also highlighted a new working group focused on ethical artificial intelligence and NASA’s work to meet goals outlined in President Joe Biden’s AI executive order from last October. The space agency also hosted a public town hall on its AI capabilities last month.

But the apparent retreat from the term artificial general intelligence is notable, given some of the futuristic concerns outlined in the 2021 framework. One goal outlined in the document, for instance, was to “set the stage for successful, peaceful, and potentially symbiotic coexistence between humans and machines.” The framework noted that while AGI had not yet been achieved, there was growing belief that there could be a “tipping point” in AI capabilities that would fundamentally change how humans interact with technology.

Experts sometimes split artificial intelligence into several categories: artificial narrow intelligence, or AI use cases designed with specific applications in mind, and artificial general intelligence, referring to AI systems that could match the capabilities of human users. NASA’s framework also refers to artificial super intelligence, which would represent AI capabilities that “surpass” human capabilities.

The document stipulated that NASA should be an “early adopter” of national and global best practices in regard to these advanced technologies. It noted that many AI systems won’t advance to the level of AGI or ASI, but still encouraged NASA to consider the potential impacts of these technologies. Many of the considerations outlined in the report appear to be far off, but they range from analyzing the possibility of encoding morality in advanced AI systems (a potentially impossible task) to “merging” astronauts with artificial intelligence.

“Creating a perfect moral code that works in all cases is still an elusive task and must be pursued by NASA experts in conjunction with other national or global experts,” the document stated. “As humans pursue long term space flight, technology may advance to a point where it would be necessary to consider the benefits and impacts of melding humans and AI machines, most notably adaptations that allow survivability during long duration space flight, but challenges if returning to Earth.”

NASA’s interest in studying the ramifications of AGI, as part of this framework, was also discussed in an email obtained by FedScoop earlier this year.